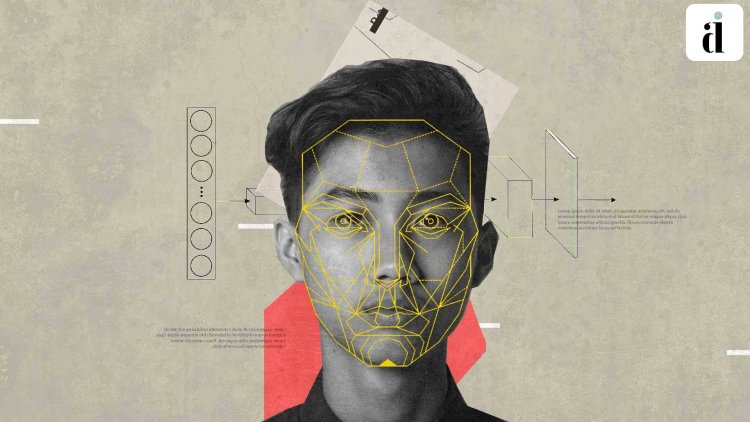

How Deep Learning Decodes Faces Into Important Characteristics Like Age

Unsupervised deep learning suggests that the brain breaks faces down into semantically meaningful characteristics, such as age.

A new study using unsupervised deep learning shows that the brain encodes faces in terms of semantically meaningful factors, such as age, at the level of single neurons.

The Active Appearance Model (AAM) is essentially a hand-designed model rather than one derived from general learning principles. In recent years, deep neural networks have become popular computational models of the ventral visual stream in monkeys. Unlike the AAM, these models are domain-general, and their representations emerge from data-driven training. Modern deep networks are trained to perform multi-class object recognition using dense supervised training signals, producing high-dimensional representations that closely resemble those found in biological systems.

Deep Classifiers

However, deep classifiers do not explain the responses of individual neurons in monkey face patches as well as the AAM does. The form of their representations also differs. Deep classifiers produce high-dimensional representations multiplexed across many simulated neurons. The AAM, by contrast, uses a low-dimensional coding scheme in which each dimension carries orthogonal information.

It has long been proposed that the visual system works by inferring the underlying structure, or latent causes, of its sensory input. These underlying factors may seem deceptively simple to humans, but they are difficult to recover in practice because they are related to the pixel-level input through highly complex nonlinear transformations.

Source: Unsupervised deep learning

Recent advances in machine learning have provided a blueprint for implementing this theory in the form of deep unsupervised generative models. For example, β-variational autoencoders (β-VAEs) learn to reliably reconstruct sensory data from low-dimensional embeddings while encouraging individual network units to encode semantically meaningful factors such as objects, colors, face gender, and scene layout. In other words, they learn disentangled representations without supervision.
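The β-VAE objective behind this idea can be sketched as two terms. The first is a reconstruction term. The second is a KL-divergence term, scaled by a weight β, that pulls each example's approximate posterior toward a factorised unit-Gaussian prior. The minimal Python sketch below assumes a diagonal-Gaussian encoder whose outputs are given directly as the hypothetical lists `mu` and `log_var`. It uses summed squared error as the reconstruction term. The function names are illustrative and are not taken from the study's code or from disentanglement_lib.

```python
import math
from typing import Sequence


def gaussian_kl(mu: Sequence[float], log_var: Sequence[float]) -> float:
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over latent units."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, log_var))


def squared_error(x: Sequence[float], x_recon: Sequence[float]) -> float:
    """Summed squared pixel error.

    Assumption: this stands in for the negative log-likelihood of a
    fixed-variance Gaussian decoder (equal up to scale and constants).
    """
    return sum((a - b) ** 2 for a, b in zip(x, x_recon))


def beta_vae_loss(
    x: Sequence[float],
    x_recon: Sequence[float],
    mu: Sequence[float],
    log_var: Sequence[float],
    beta: float,
) -> float:
    """Per-example beta-VAE loss: reconstruction error + beta * KL term.

    beta = 1 gives the standard VAE objective; beta > 1 puts extra pressure
    on the posterior to match the factorised prior, which is what encourages
    individual latent units to capture separate factors of variation.
    """
    return squared_error(x, x_recon) + beta * gaussian_kl(mu, log_var)


if __name__ == "__main__":
    # Toy example: perfect reconstruction, one latent unit shifted to mean 1.
    print(beta_vae_loss([0.0, 1.0], [0.0, 1.0], [1.0, 0.0], [0.0, 0.0], beta=4.0))  # 2.0
```

With β = 1 this reduces to the standard VAE loss. Values above 1 trade some reconstruction quality for stronger pressure toward independent, individually interpretable latent units. In a real model, `mu`, `log_var`, and `x_recon` would come from neural-network encoder and decoder passes, and the loss would be averaged over a minibatch.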

Deep Generative Models

These deep generative models continue the neuroscience community's long tradition of unsupervised generative models of vision. Such models enable powerful generalization, imagination, abstract reasoning, constructive reasoning, and other characteristics of biological visual perception. This study asks whether such a general learning objective can produce a code similar to the one used by real neurons. The results suggest that the disentangling objective optimized by the β-VAE is a plausible mechanism by which the ventral visual stream produces its low-dimensional face representations.

Conclusion

Unlike previous studies, these results show that unsupervised deep learning can account for face codes at the level of single neurons. Furthermore, the study demonstrates that the axis of variation to which an individual IT neuron is tuned corresponds to a single "disentangled" latent unit. The study thus extends previous work on single-neuron coding in monkey face patches by demonstrating a one-to-one correspondence between model units and neurons, rather than the weaker correspondence reported previously.
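The toy sketch below makes the idea of a one-to-one correspondence concrete in three steps. It matches each recorded neuron to the latent unit whose responses to the same images correlate with it most strongly (by absolute Pearson correlation). It then collects those matches into a mapping from neurons to units. Finally, it checks whether any unit is claimed by more than one neuron. This is an illustrative assumption, not the analysis used in the study. The function names and toy response values are hypothetical, and every response vector is assumed to vary across images so that the correlation is defined.

```python
import math
from typing import Dict, List, Sequence


def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation of two equal-length, non-constant response vectors."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def best_unit_per_neuron(
    neurons: List[Sequence[float]], units: List[Sequence[float]]
) -> Dict[int, int]:
    """Map each neuron index to the latent unit with the highest |r|.

    Each row holds one neuron's (or one unit's) responses to the same
    ordered set of face images.
    """
    mapping: Dict[int, int] = {}
    for i, responses in enumerate(neurons):
        scores = [abs(pearson(responses, u)) for u in units]
        mapping[i] = max(range(len(scores)), key=lambda k: scores[k])
    return mapping


def is_one_to_one(mapping: Dict[int, int]) -> bool:
    """True if no latent unit is the best match for more than one neuron."""
    return len(set(mapping.values())) == len(mapping)


if __name__ == "__main__":
    # Hypothetical responses of two latent units and two neurons to four images.
    units = [[0.0, 1.0, 2.0, 3.0], [1.0, 0.0, 1.0, 0.0]]
    neurons = [[0.1, 1.1, 2.0, 3.2], [2.0, 0.5, 2.1, 0.4]]
    mapping = best_unit_per_neuron(neurons, units)
    print(mapping, is_one_to_one(mapping))  # {0: 0, 1: 1} True
```

Correlation-based matching is used here only because it is short and easy to follow. A few-to-one code would show up as several units each explaining part of a neuron's response, which a single best match like this cannot capture.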

For more information, refer to the article.

Data

The raw responses of all models to the 2,162 face images used in this study have been deposited in the figshare repository: https://doi.org/10.6084/m9.figshare.c.5613197.v2

The following databases contain the face image data used in this study:

FERET face database: https://www.nist.gov/itl/iad/image-group/color-feret-database

CVL face database: http://lrv.fri.uni-lj.si/facedb.html

MR2 face database: http://ninastrohminger.com/the-mr2

PEAL face database: https://ieeexplore.ieee.org/document/4404053

Code

Because of the complexity of the research and its partial dependence on commercial libraries, the code supporting the results is available upon request from Irina Higgins (irinah@google.com). The VAE models, concordance scores, and UDR measures are also available as open-source implementations at https://github.com/google-research/disentanglement_lib